The bidirectional design system: When code talks back to design

How AI is shifting design systems from one-way handoffs to continuous, two-way sync – and what that means for how we build products

TL;DR: Most design systems behave like static libraries – frozen answers to yesterday’s problems. The next generation acts like responsive engines.

With Figma’s MCP server, extended collections, slot-based components, and auditing tools, design systems can finally create continuous feedback loops. Code reveals edge cases, accessibility improvements flow upstream, and the system adapts in real time. The design system stops being a mirror of the past and becomes a map for what’s next.

The upside: speed, fidelity, and systems that evolve with your product.

The risk: amplified entropy if structure is weak.

Before you turn on bidirectional sync, get your tokens, component contracts, and governance airtight.

For years, we’ve treated design and code as separate disciplines with information flowing in only one direction. Design files become specifications. Specifications become implementation. Implementation drifts from intent.

The drift is inevitable when you treat design as upstream and code as downstream. When designers create in isolation, then pass their work along. When developers implement what they’re given, then discover reality doesn’t match the plan. When the space between design and code is a chasm instead of a conversation.

But here’s the thing: the best work has never happened this way. The best work happens when designers understand implementation constraints. When developers contribute to design decisions. When the boundaries blur and both sides are in it together.

That collaborative model has always existed on the best teams. What’s changing is that the tools are finally catching up.

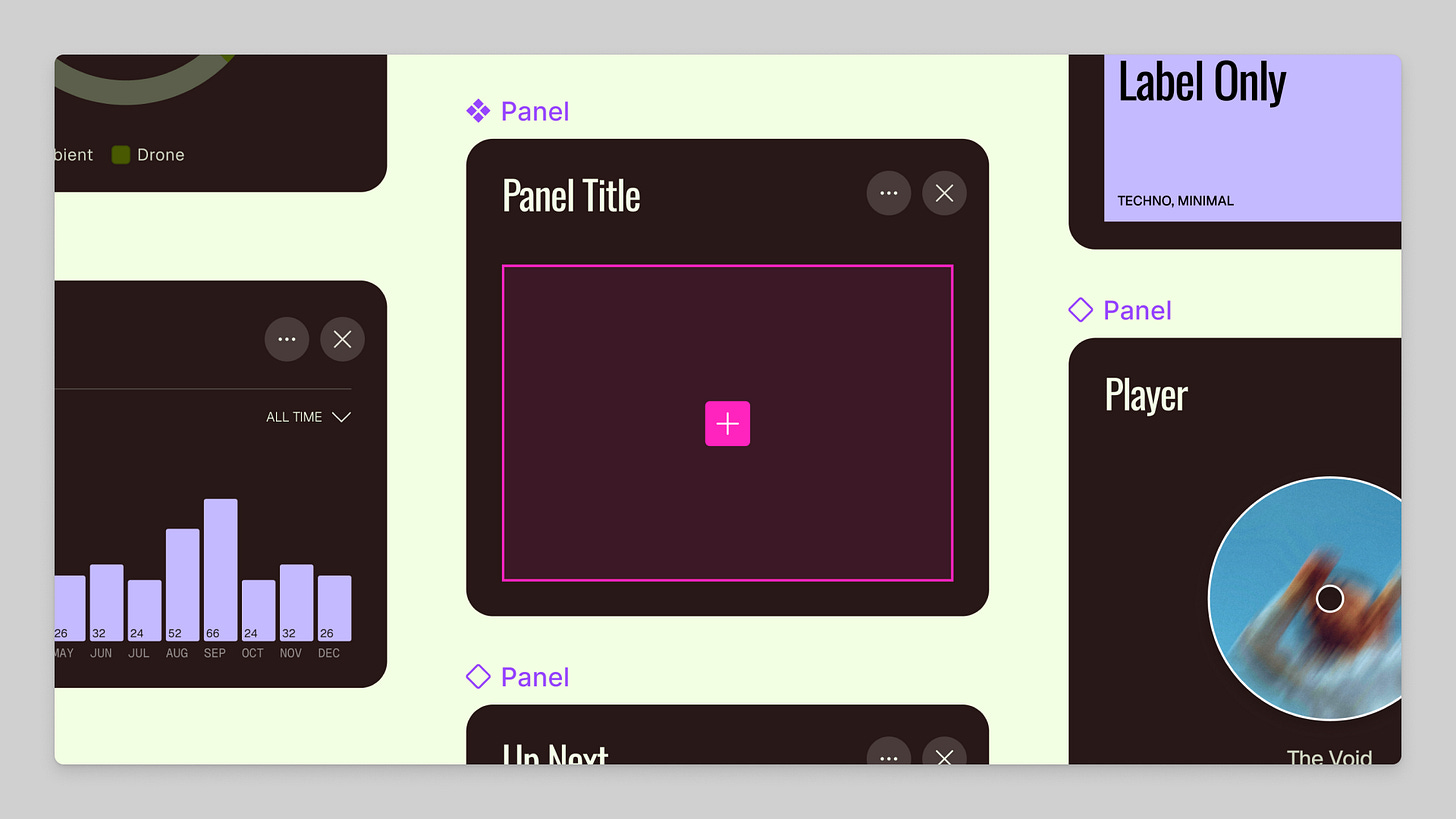

At Schema 2025, Figma announced a suite of features that make something new possible: design systems that don’t just push information to code, but pull knowledge back from it. With the Figma MCP server, extended collections, slot-based components, and new auditing tools, we’re seeing the infrastructure for design systems that learn from implementation, not just dictate to it.

The question isn’t whether your design system can generate code anymore. It’s whether your design system can have a conversation with it.

Most design systems today still behave like static libraries – collections of frozen answers to yesterday’s problems. The next generation will act more like responsive engines: systems that adjust, recalculate, and feed on new inputs as they arrive. It’s the difference between publishing documentation and maintaining a living organism.

The one-way street we’ve been living on

I’ve written before about design systems becoming APIs for AI agents. The core argument was simple: if AI agents are going to consume your design system, you need to structure it with machine-readable semantics, not just human-friendly conventions.

That article focused on making components interpretable – treating your design system like an API with clear contracts, explicit props, and semantic naming. It was about making the one-way street smoother, and making sure that when AI reads your design system, it understands what it’s looking at.

But that was only half the picture. The logical next step – the one I’ve been thinking about for the last few months – is what happens when the street becomes two-way.

Right now, even on collaborative teams, the design-to-code workflow has an asymmetry problem. Design establishes intent, engineering implements it, and then reality complicates things with async states, error handling, and edge cases. Code adapts to reality, but design files don’t. The design system becomes aspirational rather than actual – engineers build what works, designers document what should work, and the gap widens.

This isn’t a failure of process – it’s a limitation of tooling. Even when designers and developers work closely together, the tools themselves enforce a one-way information flow.

Design tools create specifications, development tools implement them. There’s no mechanism for implementation insights to flow back upstream and inform the system.

Until now. The wall between design and code isn’t gone, but it’s starting to breathe.

What bidirectional actually means

Bidirectionality isn’t a technical feature. It’s a philosophical shift – from documentation to dialogue.

When people talk about “bidirectional sync” in design systems, they often mean variable syncing or token export. You update a colour in Figma, run a script, and that colour updates in your codebase.

That’s not bidirectional. That’s just automated one-way sync.
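Here is a minimal sketch of what that kind of automated one-way sync usually amounts to. It assumes a hypothetical `DesignVariable` shape exported from a design file (this is not Figma’s API). Values are read from design and written to code, and nothing travels back.

```typescript
// Hypothetical shape of a colour variable exported from a design file.
// This is NOT Figma's REST or plugin API; it is an illustrative assumption.
interface DesignVariable {
  name: string; // e.g. "color/brand/primary"
  value: string; // hex colour, e.g. "#0055ff"
}

// One-way sync: turn design variables into CSS custom properties.
// Information flows design -> code only; nothing here can flow back.
function exportToCss(variables: DesignVariable[]): string {
  const lines = variables.map(
    (v) => `  --${v.name.replace(/\//g, "-")}: ${v.value};`,
  );
  return `:root {\n${lines.join("\n")}\n}`;
}

const css = exportToCss([
  { name: "color/brand/primary", value: "#0055ff" },
  { name: "color/feedback/error", value: "#d92d20" },
]);
console.log(css);
// :root {
//   --color-brand-primary: #0055ff;
//   --color-feedback-error: #d92d20;
// }
```

The function has no input that code could write to. That is the missing return path: whatever engineers learn while using `--color-feedback-error` has nowhere to go.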

True bidirectional sync means the relationship between design and code becomes conversational. It means:

When a developer implements better error handling, that pattern informs the design system

When an AI agent discovers a more accessible component structure, that knowledge flows upstream

When production reveals edge cases, the design system captures them as first-class patterns

When code solves real problems, those solutions become design system documentation
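One way to picture those four flows is as typed signals travelling from code back toward the design system. The `UpstreamSignal` type and `describeSignal` function below are hypothetical names used for illustration. They are not part of any Figma or MCP API.

```typescript
// Hypothetical signals describing knowledge that flows from code back to design.
type UpstreamSignal =
  | { kind: "pattern"; component: string; description: string } // e.g. better error handling
  | { kind: "accessibility"; component: string; change: string } // e.g. a more accessible structure
  | { kind: "edgeCase"; component: string; state: string } // e.g. a state seen in production
  | { kind: "documentation"; component: string; snippet: string }; // a solved problem worth documenting

// Turn each signal into a proposal a design system team can review.
function describeSignal(signal: UpstreamSignal): string {
  switch (signal.kind) {
    case "pattern":
      return `Pattern from ${signal.component}: ${signal.description}`;
    case "accessibility":
      return `Accessibility change in ${signal.component}: ${signal.change}`;
    case "edgeCase":
      return `Undocumented state "${signal.state}" in ${signal.component}`;
    case "documentation":
      return `Documentation candidate from ${signal.component}: ${signal.snippet}`;
  }
}

const signals: UpstreamSignal[] = [
  { kind: "pattern", component: "Card", description: "retry button on failed fetch" },
  { kind: "edgeCase", component: "Card", state: "loading" },
];
signals.map(describeSignal).forEach((line) => console.log(line));
```

Modelling signals as a discriminated union means the compiler makes you handle every new kind of signal somewhere. This is a small-scale version of the structure good governance needs.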

In a sense, we’re moving from static specifications to systems with reflexes. When code changes expose something new – an edge case, a better state pattern, a smarter interaction – the system shouldn’t need a meeting to adapt. It should have the reflex to learn from it.

This isn’t about designers handing off work and developers sending corrections back. It’s about design and engineering being a continuous loop. Some of the best design thinking happens in code. Some of the best engineering thinking happens in design tools. The tools should support this reality.

For designers who understand code (and there are more of us every year), this is liberating. You’re not just creating specifications – you’re creating systems that learn from implementation. For engineers who think about design (and there are more of them too), this means their insights become part of the system, not just implementation details.

Think about it like this: your design system is currently a broadcast. It should become a dialogue.

The plumbing that makes this possible

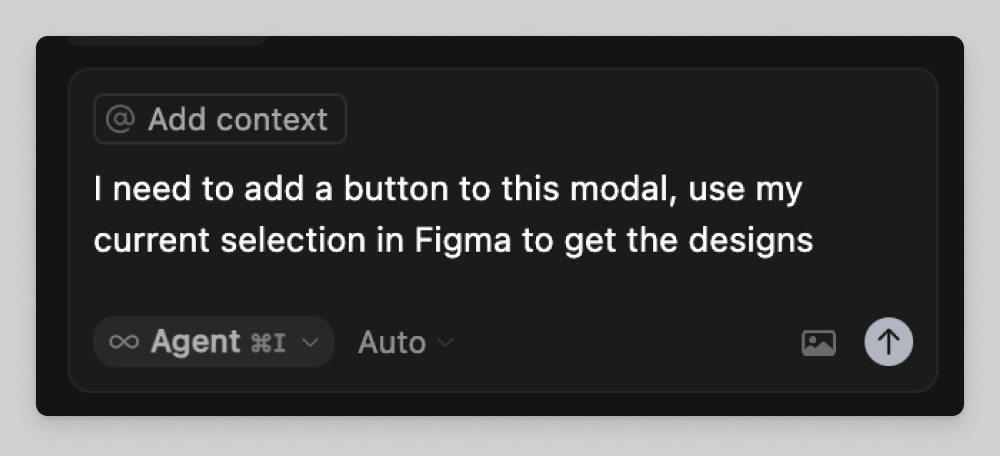

Figma’s MCP (Model Context Protocol) server is the infrastructure behind this shift. It was announced in beta earlier this year and, at Schema 2025 (October 2025), moved out of beta and became generally available. The MCP server allows AI coding agents to access Figma’s design data directly – not through screenshots, but through structured, semantic information about components, variables, layout systems, and responsive behaviour.

The official Figma MCP server currently provides read-only access to design data. It lets AI agents query your Figma files and generate code that respects your design system. But the protocol itself supports bidirectional communication – and that’s where things get interesting.
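Under the hood, MCP messages are JSON-RPC 2.0 requests, and a client invokes a server capability with the `tools/call` method. The sketch below builds such requests. The tool names (`example_read_component`, `example_set_fill`) and their arguments are hypothetical placeholders, not the tools Figma’s server actually exposes. A real client would discover the available tools with `tools/list`.

```typescript
// Minimal JSON-RPC 2.0 request shape that MCP uses to invoke a server tool.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

let nextId = 1;

// Build a tool-call request with a fresh request id.
function buildToolCall(name: string, args: Record<string, unknown>): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// A read: ask for structured data about one design node (hypothetical tool and node id).
const readRequest = buildToolCall("example_read_component", { nodeId: "12:34" });

// A write uses the same message shape; only the tool differs (also hypothetical).
const writeRequest = buildToolCall("example_set_fill", { nodeId: "12:34", color: "#0055ff" });

console.log(JSON.stringify(readRequest));
console.log(JSON.stringify(writeRequest));
```

Nothing in the message format distinguishes reads from writes. Whether writing back is possible depends entirely on which tools a server chooses to expose.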

Third-party implementations are already exploring write capabilities. Community-developed MCP servers like cursor-talk-to-figma enable developers to create and modify Figma elements directly from their IDE. These servers can create rectangles, update text content, set fill colours, adjust corner radius, and manage annotations – all programmatically.

The architecture exists. The protocol supports it. Figma has explicitly hinted at this future, noting that the MCP server could become “a two-way connector, pulling external context back into Figma.”

The most exciting part isn’t code generation – it’s calibration. Each change, each implementation nuance, becomes another signal that helps the system fine-tune itself between design intent and reality.

What changes when code can inform design

Let’s walk through a realistic scenario.

Your team is building a card component. Early conversations identify three variants: default, highlighted, and disabled. Clean. Simple. Everyone agrees.

Then you start building.

The engineer implementing it realises cards need a loading state because they fetch data asynchronously. Fair enough. But then API errors happen, so there’s an error state. Archived items need a read-only state. Incomplete data needs a partial state. The complexity emerges from reality.
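In code, that growth tends to show up in the type describing the component’s state. Here is a minimal sketch, assuming a TypeScript implementation. `CardState` and `cardLabel` are hypothetical names.

```typescript
// States the implementation actually needs once real data is involved.
// Only the first three came from the early design conversations.
type CardState =
  | { status: "default" }
  | { status: "highlighted" }
  | { status: "disabled" }
  | { status: "loading" } // data is still being fetched
  | { status: "error"; message: string } // the API request failed
  | { status: "readOnly" } // archived items cannot be edited
  | { status: "partial"; missingFields: string[] }; // some data is incomplete

// Exhaustive rendering: the compiler rejects this function if a state is unhandled.
function cardLabel(state: CardState): string {
  switch (state.status) {
    case "default":
      return "Card";
    case "highlighted":
      return "Card (highlighted)";
    case "disabled":
      return "Card (disabled)";
    case "loading":
      return "Loading";
    case "error":
      return `Error: ${state.message}`;
    case "readOnly":
      return "Archived (read-only)";
    case "partial":
      return `Incomplete: missing ${state.missingFields.join(", ")}`;
  }
}

console.log(cardLabel({ status: "error", message: "Network timeout" })); // prints "Error: Network timeout"
```

Because the switch is exhaustive, adding an eighth state breaks the build until that state is handled. The code always knows its full state space, even when the design file doesn’t.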

In a traditional model, these states get built but exist only in code. Maybe they get documented in Storybook. Maybe someone files a ticket to update the design system. Maybe that ticket sits in the backlog for months. By the time the design system catches up, the implementation has evolved further. The design system becomes documentation of intent, not reality.

In a bidirectional model, something different happens.

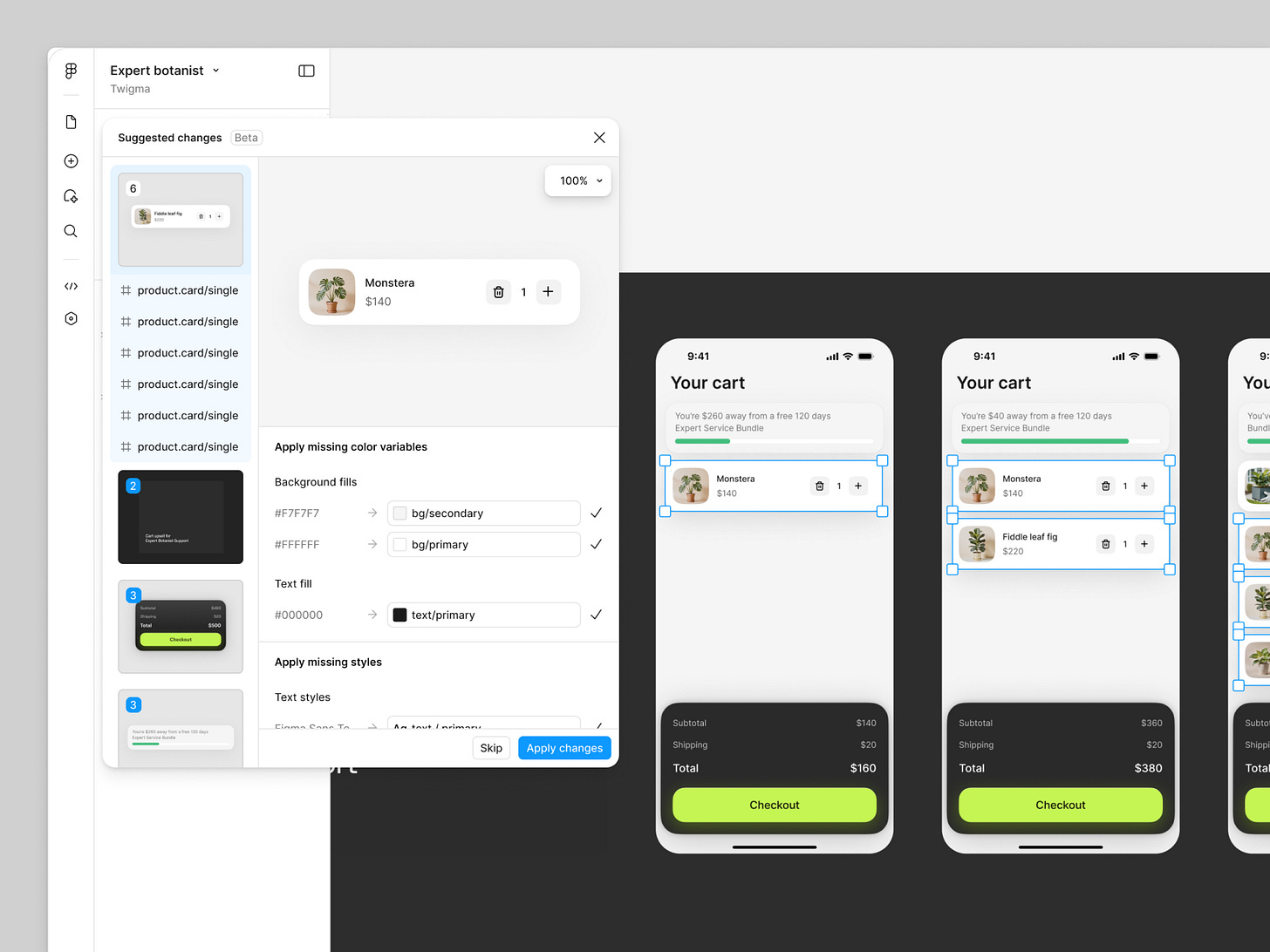

An AI agent helps implement the loading state. The system flags it: “I notice this state doesn’t exist in the design system. Should this be documented as a pattern?”
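The check behind that prompt can be as simple as a set difference between documented variants and implemented states. This sketch assumes some tooling has already extracted both lists; how it does so is left open here. `findUndocumentedStates` and `proposals` are hypothetical.

```typescript
// Return implemented states that have no matching documented variant (case-insensitive).
function findUndocumentedStates(designVariants: string[], codeStates: string[]): string[] {
  const documented = new Set(designVariants.map((v) => v.toLowerCase()));
  return codeStates.filter((s) => !documented.has(s.toLowerCase()));
}

// Turn each gap into the kind of prompt an agent might raise.
function proposals(component: string, designVariants: string[], codeStates: string[]): string[] {
  return findUndocumentedStates(designVariants, codeStates).map(
    (state) =>
      `${component}: "${state}" doesn't exist in the design system. Should this be documented as a pattern?`,
  );
}

const cardProposals = proposals(
  "Card",
  ["default", "highlighted", "disabled"],
  ["default", "highlighted", "disabled", "loading", "error", "readOnly", "partial"],
);
cardProposals.forEach((p) => console.log(p));
```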

The engineer builds better error handling. The system suggests: “This error pattern could be valuable to other components. Should we create tokens for error states?”

Accessibility improvements discovered in real-world use get refactored into the component. The changes flow back to Figma, updating not just the visual representation but the underlying structure – semantic markup, keyboard navigation patterns, ARIA attributes.

The design system doesn’t become less designed – it becomes more comprehensive, capturing the collective intelligence of everyone who builds with it, not just those who initially specified it.

The goal isn’t just for designers to teach the system how to build. It’s for the product itself to start teaching the system how it’s actually used. That’s when a design system stops being a mirror of the past and starts becoming a map for what’s next.

The Config and Schema 2025 pieces of the puzzle

Config 2025 and Schema 2025 announced features that start to make this vision tangible.

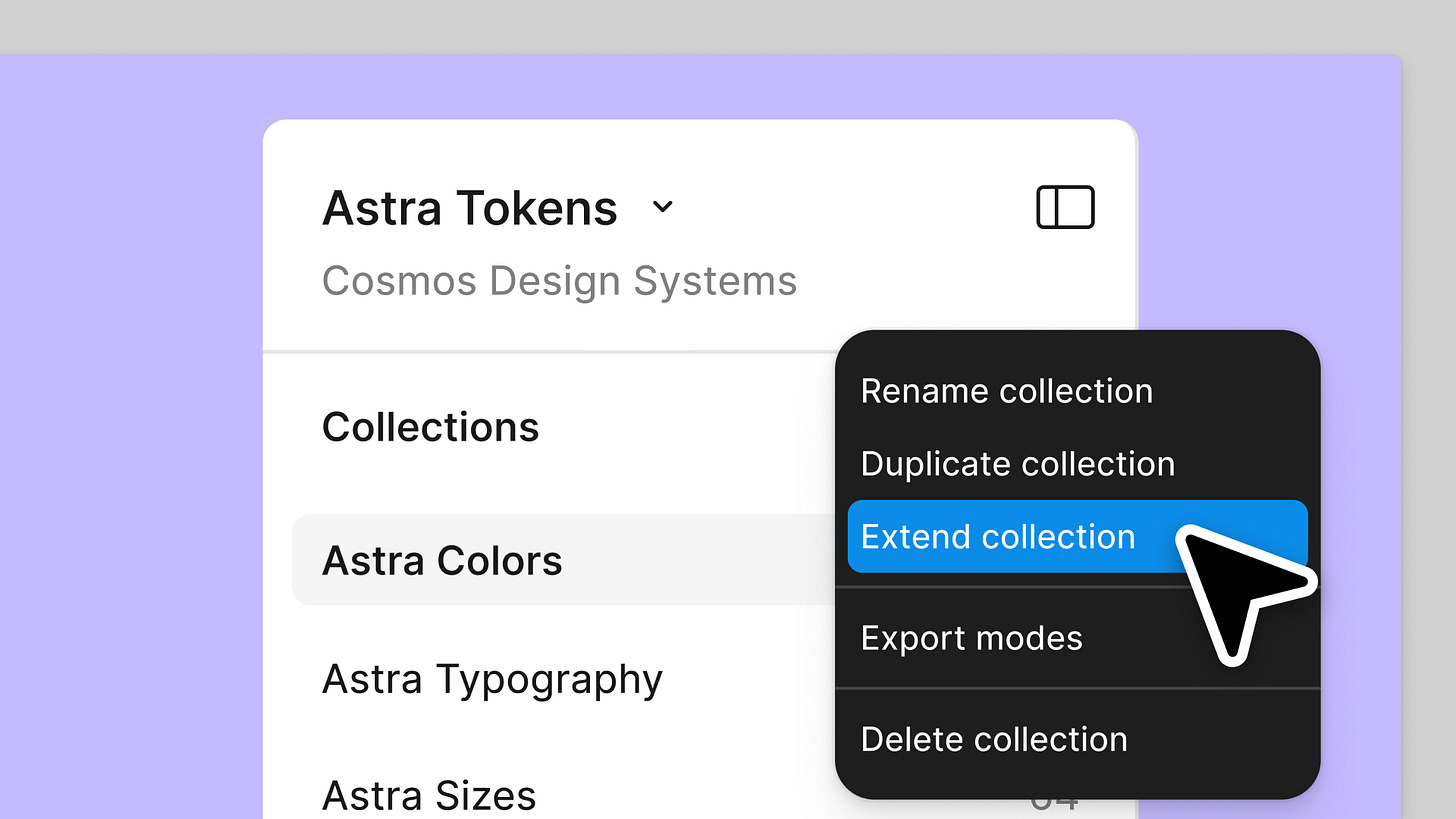

Extended Collections allow multi-brand design systems where child systems inherit from a parent, automatically receiving updates. This creates the infrastructure for propagating changes not just downstream (parent to child), but potentially upstream (capturing learnings from child implementations to inform the parent).
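The downstream half of that is ordinary inheritance: a child resolves a value locally and falls back to its parent. The upstream half is speculative, but a first step could be listing what children override or add. The `Collection` model below is a hypothetical illustration, not Figma’s data model for extended collections.

```typescript
// Hypothetical model: a collection stores only the tokens it defines or overrides.
// This is an illustration, not Figma's data model for extended collections.
type TokenMap = Record<string, string>;

interface Collection {
  name: string;
  tokens: TokenMap;
  parent?: Collection;
}

const hasOwn = (map: TokenMap, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(map, key);

// Downstream: use the local value if present, otherwise inherit from the parent chain.
function resolve(collection: Collection, token: string): string | undefined {
  if (hasOwn(collection.tokens, token)) return collection.tokens[token];
  return collection.parent ? resolve(collection.parent, token) : undefined;
}

// Upstream (speculative): tokens a child overrides or adds relative to its parent.
function upstreamCandidates(child: Collection): string[] {
  const parent = child.parent;
  if (!parent) return [];
  return Object.keys(child.tokens).filter(
    (token) => resolve(parent, token) !== child.tokens[token],
  );
}

const core: Collection = {
  name: "core",
  tokens: { "color/primary": "#0055ff", "radius/card": "8px" },
};
const brandA: Collection = {
  name: "brand-a",
  parent: core,
  tokens: { "color/primary": "#7a00e6", "color/error-surface": "#fee4e2" },
};

const inherited = resolve(brandA, "radius/card"); // "8px", inherited from core
const candidates = upstreamCandidates(brandA); // ["color/primary", "color/error-surface"]
```

A brand that adds `color/error-surface` hints that the parent may be missing a concept. That is exactly the kind of learning that should travel upstream.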

Slots let you add layers within component instances without detaching, creating more flexible components that reduce the need for duplication. This flexibility becomes critical when code needs to adapt designs for real-world edge cases – you need components that can stretch without breaking their connection to the system.
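Slots have a familiar analogue in code: a named insertion point that lets callers add content without forking the component. Here is a minimal sketch in plain TypeScript. `CardProps`, `footer`, and `renderCard` are hypothetical, and HTML escaping is omitted for brevity.

```typescript
// Hypothetical Card contract with one named slot. The component keeps its own
// structure; callers inject content into the slot instead of copying the component.
interface CardProps {
  title: string;
  footer?: () => string; // optional slot, e.g. a warning banner for an edge case
}

// Render to an HTML string (no escaping; illustration only).
function renderCard({ title, footer }: CardProps): string {
  const parts = [`<div class="card"><h3>${title}</h3>`];
  if (footer) parts.push(`<footer>${footer()}</footer>`);
  parts.push("</div>");
  return parts.join("");
}

const plain = renderCard({ title: "Invoice" });
const withSlot = renderCard({ title: "Invoice", footer: () => "Payment overdue" });
```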

Check Designs audits your files for inconsistencies, flagging layers that should use design system tokens but don’t. This is your drift detector – the system that spots when a file has drifted away from the system it’s supposed to use. In a bidirectional world, it could do more than flag problems: it could propose solutions based on what actually exists in code.
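The core of a drift check is easy to sketch, although this is not a description of how Check Designs actually works. Assuming a hypothetical, simplified `Layer` record, the check flags layers with no token binding. Where a token has the same raw value, it suggests that token.

```typescript
// Hypothetical, simplified layer record: a raw fill plus an optional token binding.
interface Layer {
  id: string;
  fill: string; // hex value actually applied
  token?: string; // name of the bound design token, if any
}

interface DriftFinding {
  layerId: string;
  fill: string;
  suggestedToken?: string; // a token whose value matches the raw fill, if one exists
}

// Flag unbound layers and, where possible, suggest the token with the same value.
function detectDrift(layers: Layer[], tokens: Record<string, string>): DriftFinding[] {
  // Index tokens by lower-cased value so raw fills can be matched back to names.
  const byValue = new Map<string, string>();
  for (const [name, value] of Object.entries(tokens)) {
    byValue.set(value.toLowerCase(), name);
  }
  return layers
    .filter((layer) => layer.token === undefined)
    .map((layer) => ({
      layerId: layer.id,
      fill: layer.fill,
      suggestedToken: byValue.get(layer.fill.toLowerCase()),
    }));
}

const findings = detectDrift(
  [
    { id: "a", fill: "#0055FF" },
    { id: "b", fill: "#123456" },
    { id: "c", fill: "#0055ff", token: "color/primary" },
  ],
  { "color/primary": "#0055ff" },
);
```

If two tokens share a value, this sketch keeps the last one it sees. A real tool would need a better tie-break, such as preferring semantic tokens over primitives.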

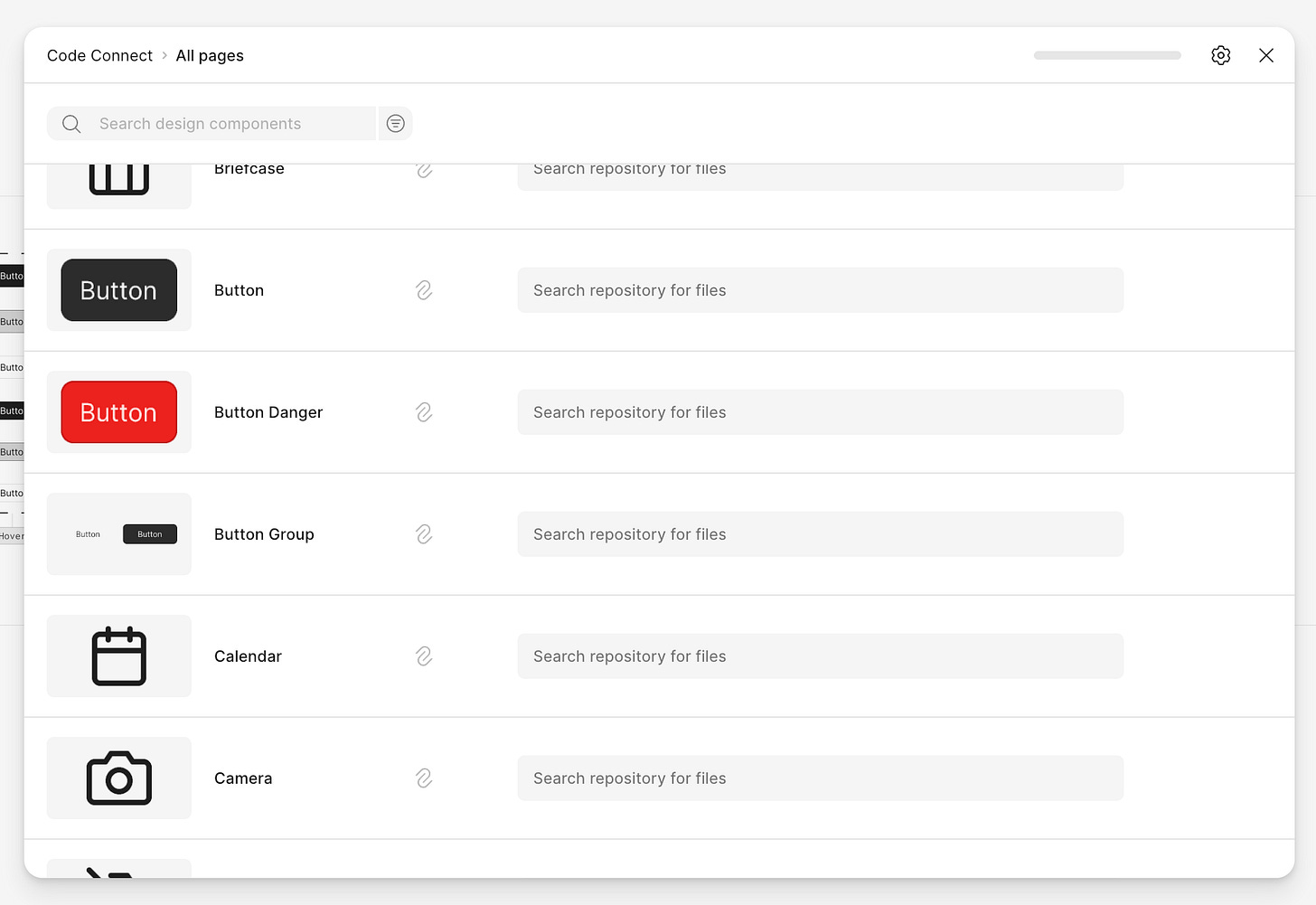

Code Connect UI makes it easier to map Figma components to actual codebase components, using AI to suggest the right code files. These mappings are foundational – you can’t have meaningful bidirectional sync without knowing which design components correspond to which code components. This is the Rosetta Stone that makes translation possible.
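Whatever the authoring format, the useful property of these mappings is that they can be read in both directions. The sketch below uses a hypothetical `ComponentMapping` record and `ComponentIndex` class to show the two lookups bidirectional sync needs. Real Code Connect mappings are authored differently.

```typescript
// Hypothetical mapping between a design component identifier and a code location.
interface ComponentMapping {
  designKey: string; // identifier of the component in the design file
  codePath: string; // path of the implementing module in the repository
}

class ComponentIndex {
  private designToCode = new Map<string, string>();
  private codeToDesign = new Map<string, string>();

  constructor(mappings: ComponentMapping[]) {
    for (const m of mappings) {
      this.designToCode.set(m.designKey, m.codePath);
      this.codeToDesign.set(m.codePath, m.designKey);
    }
  }

  // Design -> code: where should a design change be implemented?
  codeFor(designKey: string): string | undefined {
    return this.designToCode.get(designKey);
  }

  // Code -> design: which design component does a code change belong to?
  designFor(codePath: string): string | undefined {
    return this.codeToDesign.get(codePath);
  }
}

const index = new ComponentIndex([
  { designKey: "Card", codePath: "src/components/Card.tsx" },
  { designKey: "Button", codePath: "src/components/Button.tsx" },
]);
```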

The Figma MCP server is now out of beta and generally available, with the ability to add guidelines for how AI should adhere to your design system. This is the actual technical infrastructure that enables the conversation – the protocol that lets AI agents read from Figma and (through third-party implementations) potentially write back to it.

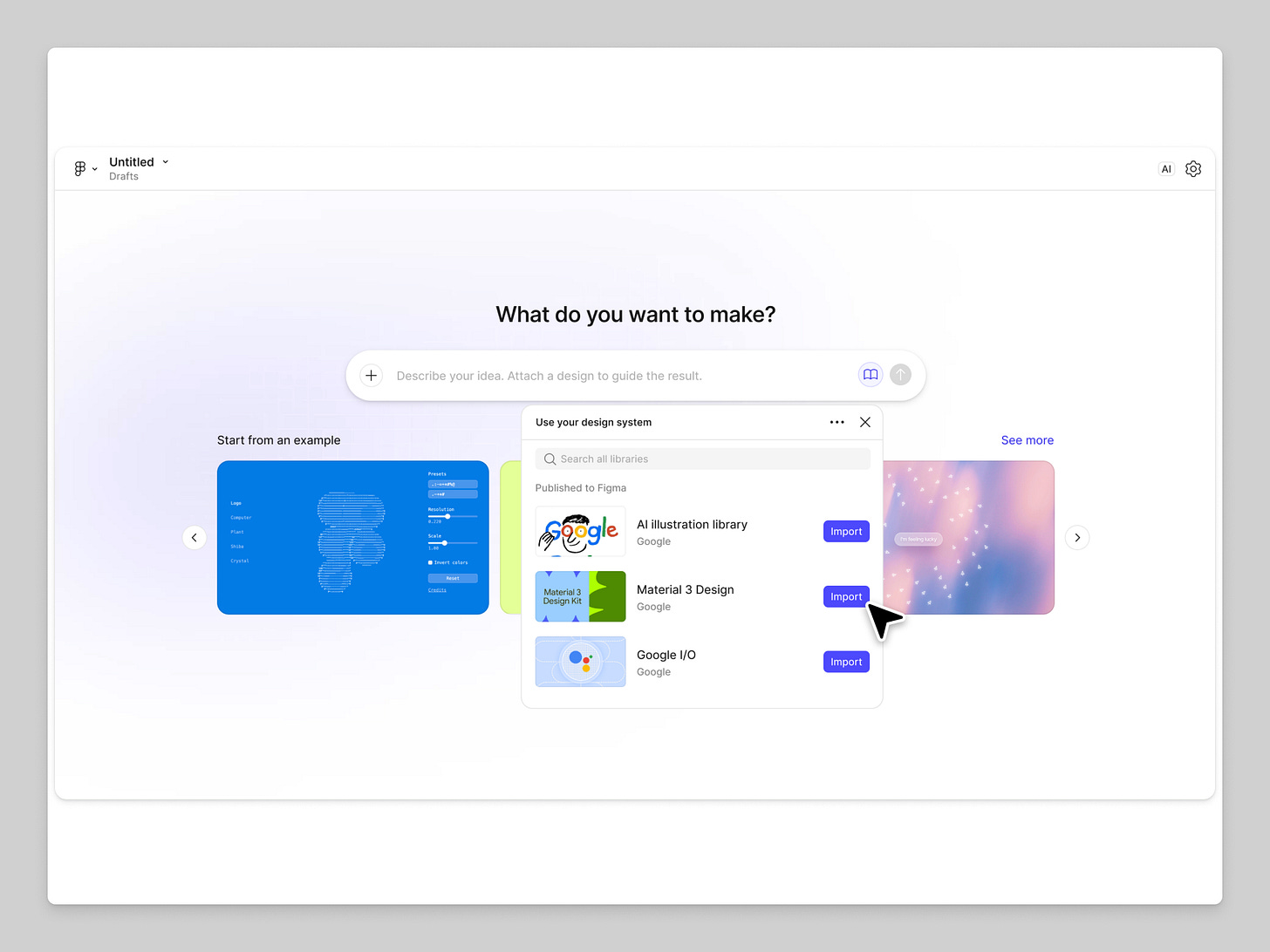

Make Kits bring your Figma design libraries into AI prototyping. But more importantly, they create a bridge where AI-generated code can reference and extend your design system, creating a feedback loop where rapid prototyping informs system evolution.

None of these features alone creates bidirectional sync. But together, they’re building the foundations for a system that can receive information, not just send it.

Put simply, we’re watching design systems evolve from design-time libraries into runtime systems – ones that don’t stop at describing the ideal, but learn from what’s real.

The best design systems won’t just describe how things should be built – they’ll learn from how things actually are.

The hard problems beneath the hype

Let’s be honest about what makes this complicated.

First, there’s the question of authority. If both design and code can propose changes, how do you maintain coherence? How do you prevent well-intentioned chaos?

This is where design system governance becomes critical. But not governance as gatekeeping. Governance as collaborative decision-making. You need clear criteria for what makes a good pattern. You need processes that value implementation insights as much as design intent. You need to know which changes strengthen the system and which fragment it.
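Criteria like these can be written down explicitly, so every proposal is judged the same way regardless of its origin. The `Proposal` fields and the three criteria below are illustrative assumptions. Each team would define its own.

```typescript
// Hypothetical proposal record for a change suggested from design or from code.
interface Proposal {
  name: string;
  origin: "design" | "code";
  usesTokensOnly: boolean; // no hard-coded values
  meetsAccessibilityChecks: boolean;
  componentsUsingIt: number; // how many places already need it
}

// Illustrative criteria; each team would define its own.
const criteria: Array<[string, (p: Proposal) => boolean]> = [
  ["uses design tokens", (p) => p.usesTokensOnly],
  ["passes accessibility checks", (p) => p.meetsAccessibilityChecks],
  ["needed by at least two components", (p) => p.componentsUsingIt >= 2],
];

// Returns the criteria a proposal fails; an empty list means it is ready for review.
// Origin is deliberately not a criterion: code-originated ideas are judged the same way.
function unmetCriteria(p: Proposal): string[] {
  return criteria.filter(([, check]) => !check(p)).map(([label]) => label);
}

const loadingState: Proposal = {
  name: "Card loading state",
  origin: "code",
  usesTokensOnly: true,
  meetsAccessibilityChecks: false,
  componentsUsingIt: 3,
};
const gaps = unmetCriteria(loadingState); // ["passes accessibility checks"]
```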

The best teams already operate this way. Engineers propose component variants. Designers implement accessibility fixes. Everyone contributes to the system. Bidirectional tooling just makes these contributions easier to capture and formalise. Without clear structure, a bidirectional system doesn’t get smarter – it just metabolises chaos faster.

Second, there’s the semantic challenge. Code and design speak different languages. A developer might implement a “loading” state using CSS transitions and JavaScript promises. How does that translate back to Figma? What’s the meaningful abstraction that should flow upstream?
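One answer is to let code report only the design-level state and keep mechanics such as promises and transitions local. Here is a minimal sketch, assuming a design system that names three states. `DesignState` and `trackState` are hypothetical.

```typescript
// Design-level states the design system can represent as variants.
type DesignState = "loading" | "default" | "error";

// Run async work and report only design-level states; implementation details
// (promises, retries, transitions) stay inside the component.
async function trackState<T>(
  work: Promise<T>,
  onState: (state: DesignState) => void,
): Promise<T | undefined> {
  onState("loading");
  try {
    const result = await work;
    onState("default");
    return result;
  } catch {
    onState("error");
    return undefined;
  }
}

// Logs "loading", then "default".
trackState(Promise.resolve(42), (state) => console.log(state));
```

A CSS transition can key off the reported state inside the implementation. Only the three state names need to travel upstream.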

This requires systems that can identify patterns, not just copy implementations. AI helps here – much like how analytics transformed baseball by finding patterns others missed, it can analyse code changes and propose meaningful updates to the design system. But it requires sophisticated pattern recognition and a deep understanding of both design and development constraints.

Third, there’s the risk of entropy. Remember my article about the hidden cost of design system entropy? AI doesn’t fix your design system – it amplifies it. If you open bidirectional sync on a messy system, you won’t get order. You’ll get amplified mess.

The discipline required for machine-readable design systems becomes even more critical when those systems can be modified programmatically. Your naming conventions, your token structure, your component architecture – all of these need to be airtight and enforced before you consider bidirectional workflows.
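Enforcement can start with something as plain as validating names before any programmatic change is accepted. The convention encoded below is a hypothetical example: `category/property/variant`, lowercase and hyphenated. Substitute your own scheme.

```typescript
// Hypothetical convention: category/property/variant in lowercase kebab case,
// e.g. "color/background/surface-raised".
const TOKEN_NAME = /^(color|space|radius|font)\/[a-z]+(-[a-z]+)*\/[a-z0-9]+(-[a-z0-9]+)*$/;

// Return the names that break the convention, so a programmatic change can be rejected.
function invalidTokenNames(names: string[]): string[] {
  return names.filter((name) => !TOKEN_NAME.test(name));
}

const invalid = invalidTokenNames([
  "color/background/surface-raised",
  "Color/Primary",
  "space/inset/md",
  "radius/card",
]); // ["Color/Primary", "radius/card"]
```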

How teams can start building toward bidirectionality

This isn’t science fiction. The infrastructure exists. The early implementations are already being tested. The question isn’t if bidirectional design systems will happen – it’s when your team will be ready for them.

Here’s what that preparation looks like:

Technical preparation

Audit your current system for machine readability. Go back and read about treating your design system like an API. Are your component names semantic? Do your props communicate intent? Are your tokens consistently applied? If AI can’t read your system clearly now, bidirectional sync will only create confusion.

Clean up your design tokens. Make sure they’re semantic, consistently applied, and properly documented. These will be the first things to benefit from bidirectional workflows.
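One audit you can script is checking that every semantic token resolves to a real value. This sketch assumes a hypothetical two-tier token set in which semantic tokens reference primitives with `{name}` aliases. The syntax is similar to the references in the Design Tokens Community Group format.

```typescript
// Hypothetical two-tier token set: primitives hold raw values,
// semantic tokens reference primitives with "{name}" aliases.
const tokens: Record<string, string> = {
  "blue-600": "#0055ff",
  "red-600": "#d92d20",
  "color/action/primary": "{blue-600}",
  "color/feedback/error": "{red-600}",
  "color/feedback/warning": "{amber-500}", // broken: this primitive does not exist
};

// Follow aliases to a raw value; undefined means a missing reference or a cycle.
function resolveToken(name: string, seen: Set<string> = new Set<string>()): string | undefined {
  const value = tokens[name];
  if (value === undefined || seen.has(name)) return undefined;
  seen.add(name);
  const alias = /^\{(.+)\}$/.exec(value);
  return alias ? resolveToken(alias[1], seen) : value;
}

// Audit: every token that fails to resolve.
const broken = Object.keys(tokens).filter((name) => resolveToken(name) === undefined);
// broken is ["color/feedback/warning"]
```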

Document your component contracts. Understanding APIs and how they work becomes essential. Every component should have a clear contract – what it does, what props it accepts, what constraints it operates within. This documentation becomes the shared language that allows code changes to be meaningfully translated back to design.
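Writing the contract as data makes it readable by people and tools alike. This sketch uses a hypothetical `ComponentContract` shape and a `validateUsage` check for missing or unknown props. The Button details are invented for illustration.

```typescript
// Hypothetical contract shape: what a component does, what it accepts, what it must respect.
interface ComponentContract {
  name: string;
  purpose: string;
  props: Record<string, { type: string; required: boolean; description: string }>;
  constraints: string[];
}

const buttonContract: ComponentContract = {
  name: "Button",
  purpose: "Triggers a single action",
  props: {
    label: { type: "string", required: true, description: "Visible text" },
    variant: { type: '"primary" | "secondary"', required: false, description: "Visual emphasis" },
    disabled: { type: "boolean", required: false, description: "Blocks interaction" },
  },
  constraints: ["Label is a verb phrase", "At most one primary button per view"],
};

// Check a usage against the contract: missing required props, then unknown props.
function validateUsage(contract: ComponentContract, usage: Record<string, unknown>): string[] {
  const errors: string[] = [];
  for (const [prop, spec] of Object.entries(contract.props)) {
    if (spec.required && !(prop in usage)) errors.push(`missing required prop "${prop}"`);
  }
  for (const prop of Object.keys(usage)) {
    if (!(prop in contract.props)) errors.push(`unknown prop "${prop}"`);
  }
  return errors;
}

const errors = validateUsage(buttonContract, { variant: "primary", size: "xl" });
// errors is ['missing required prop "label"', 'unknown prop "size"']
```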

Start experimenting with Figma’s MCP server if you have Dev Mode access. Even in read-only mode, you’ll learn how AI agents interact with your design system and where the gaps are.

Watch the community implementations. Third-party MCP servers are experimenting with write capabilities now. Their learnings will inform what becomes possible at scale.

Cultural preparation

Establish collaborative governance models. Who contributes to the design system? How do proposals get evaluated? What’s the process for a code-originated pattern to become official? These questions are less about hierarchy and more about ensuring quality. The most successful design systems have clear empathy-driven adoption strategies where everyone who builds with the system can contribute to it.

Create feedback loops between design and engineering. Start small. What if engineers could propose new patterns directly? What if designers could see which components are causing implementation friction? These human feedback loops teach you what bidirectional sync should look like before you automate it.
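The second question can be answered with very little machinery: count how often people work around each component. The `FrictionEvent` record below is hypothetical. The events could come from detached instances in design files or from style overrides in code.

```typescript
// Hypothetical friction events: a detached instance in a design file, or a
// local style override in code, tagged with the component involved.
interface FrictionEvent {
  component: string;
  kind: "detached" | "override";
}

// Count workarounds per component, most friction first.
function frictionReport(events: FrictionEvent[]): Array<[string, number]> {
  const counts = new Map<string, number>();
  for (const event of events) {
    counts.set(event.component, (counts.get(event.component) ?? 0) + 1);
  }
  return Array.from(counts.entries()).sort((a, b) => b[1] - a[1]);
}

const report = frictionReport([
  { component: "Card", kind: "detached" },
  { component: "Modal", kind: "override" },
  { component: "Card", kind: "override" },
  { component: "Card", kind: "detached" },
]); // [["Card", 3], ["Modal", 1]]
```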

Strengthen your design-to-development collaboration. The teams that will benefit most from bidirectional systems are the ones already working as one team. If design and engineering still operate in silos, fix that before adding technical complexity.

Question your assumptions about “source of truth.” Maybe the source of truth isn’t a single file or codebase. Maybe it’s the system of constraints, patterns, and decisions that exists across both.

Where this leads

The move toward bidirectional design systems is part of a larger transformation in how we build products. For years, we’ve organised work into discrete stages: research, design, development, QA, deployment. Each stage passes work to the next. Information flows forward, rarely backward.

But increasingly, we’re building systems that learn and adapt in real time. Products that respond to how people actually use them, not just how we thought they would. Designing for outcomes rather than features means creating feedback loops everywhere.

Bidirectional design systems acknowledge that the best source of truth isn’t the initial design or the final code – it’s the conversation between them. For designers, this means recognising that some of the best design thinking happens during implementation. For developers, it means understanding that implementation decisions are design decisions. For both, it means getting comfortable with systems that evolve through contribution rather than systems that are specified once and locked down.

The future isn’t more control; it’s better feedback. Systems that don’t just learn from use – they adapt to it. They recalibrate, evolve, and keep their footing as products shift under them. That’s the real future of design systems: not control, but conversation. Not documentation, but intelligence in motion.


This article is also available on Medium, where I share more posts like this. If you’re active there, feel free to follow me for updates.
